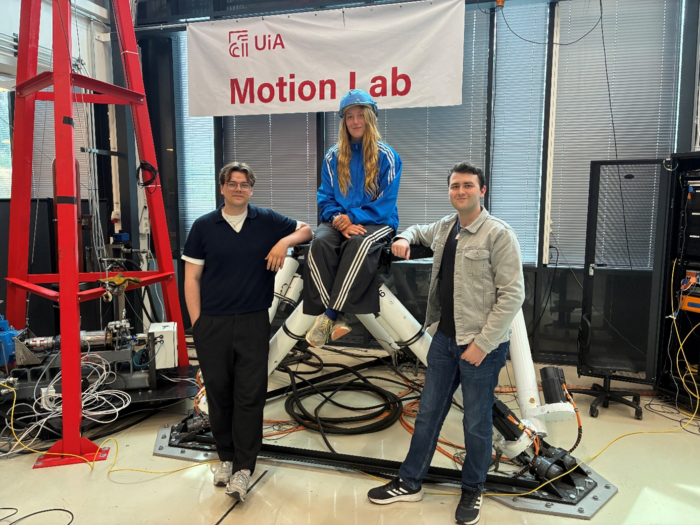

This students-in-research-and-innovation project, carried out by Elias Landro and Marcus Axelsen Wold, is a transdisciplinary collaboration between choreographer Karolina Bieszczad-Stie, dancer Ellinor Staurbakk, and the Motion Lab at the University of Agder.

The project combined mechatronics and contemporary dance to explore how modern dynamic machinery, specifically a Stewart Platform, can be used to choreograph unique dance performances. It drew on motion control, system modeling, and real-time feedback: each actuator’s extension followed precise dynamic calculations while responding to the dancer’s improvisation, creating a shared motion system between human and machine.

Background

The main goal of this project was to gain experience with choreographic interactions between the dancer and the Stewart Platform using the Qualisys motion capture system. It also aimed to prepare the equipment and software for this type of exploration while collecting material for an application to Kulturrådet to enable further collaboration on this topic.

This project combined two students-in-research-and-innovation projects:

• Choreographic Interactions with the Stewart Platform at UiA Motion Lab: Motion Reference Unit (MRU)

• Choreographic Interactions with the Stewart Platform at UiA Motion Lab: Qualisys Motion Capture

Initially, both the MRU and the Qualisys system were investigated, but it was decided early to focus on using the Qualisys system.

Objectives

The following student tasks were aimed at developing skills and competencies in mechatronics research:

• Operate the Stewart Platform safely during testing.

• Integrate and operate the Qualisys motion capture system to capture detailed data on platform and dancer movements.

• Investigate and test methods for dancer-controlled, real-time six-degree-of-freedom Stewart Platform movement.

Equipment

The experiments used a Rexroth E-Motion 1500 Stewart Platform, which can be controlled in real time using Beckhoff PLCs for communication, filtering, and platform control. The system could generate its own movement data or combine it with live sensor input.

For real-time human interaction, a Qualisys motion tracking system with eight cameras tracked specialized markers on the dancer, enabling the platform to match the dancer’s movements in six degrees of freedom during exploration.
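For readers unfamiliar with Stewart Platforms, the link between a six-degree-of-freedom pose and the six actuator extensions is usually computed with inverse kinematics: each leg length is the distance between its base anchor and its moved platform anchor. The following is a minimal sketch of that general technique, not the Rexroth controller's implementation. The function name `leg_lengths` and any anchor coordinates passed to it are hypothetical.

```python
import math

def leg_lengths(translation, rotation, base_points, platform_points):
    """Return one leg length per actuator for a given platform pose.

    translation     -- (x, y, z) of the platform origin in the base frame
    rotation        -- 3x3 rotation matrix (nested lists), platform to base frame
    base_points     -- actuator anchors on the base, in the base frame
    platform_points -- actuator anchors on the platform, in the platform frame
    All geometry here is illustrative; real anchor coordinates are not given
    in the project description.
    """
    lengths = []
    for base, anchor in zip(base_points, platform_points):
        # Rotate the platform anchor into the base frame, then translate it.
        moved = [
            sum(rotation[r][c] * anchor[c] for c in range(3)) + translation[r]
            for r in range(3)
        ]
        # The leg length is the straight-line distance between the two anchors.
        lengths.append(math.dist(moved, base))
    return lengths
```

With an identity rotation and a pure vertical offset, every leg in this simplified geometry has the same length, which makes a convenient sanity check before trying tilted poses.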

Safety Challenges

Working with dynamic machines demands precision to avoid risk. Because the Stewart Platform can lift up to 1500 kg at high speeds, safety measures were implemented both in the control system and as physical protection.

A lab engineer helped establish two reliable safety layers to prevent dangerous sudden movements, and an accessible emergency stop was added to the human-machine interface (HMI).

The platform was programmed with movement patterns that supported the dancer’s stability, and real-time data input was limited to prevent abrupt, unpredictable movements. An important part of the safety strategy was the harness/crane setup, which added a safety layer while allowing freedom of movement, balancing safety regulations with artistic exploration.

Additionally, choreographic parameters like movement tempo and amplitude were refined with the dancer to support stability or intentional vulnerability within safe boundaries.
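One common way to keep real-time input from causing abrupt movement is to limit both how far a setpoint may travel and how much it may change per control cycle. The sketch below illustrates that idea only. The function name `limit_setpoint` and all numeric limits are hypothetical, and the project's actual PLC filtering may work differently.

```python
def limit_setpoint(previous, target, max_step, lower, upper):
    """Move from `previous` toward `target` without jumping.

    max_step     -- largest allowed change per control cycle (rate limit)
    lower, upper -- allowed range for the setpoint (range limit)
    All limit values are illustrative assumptions, not project settings.
    """
    # Rate limit: cap the change requested in this cycle.
    step = max(-max_step, min(max_step, target - previous))
    # Range limit: keep the result inside the allowed envelope.
    return max(lower, min(upper, previous + step))


# Example: a sudden jump in the tracked input is spread over several cycles.
position = 0.0
for _ in range(3):
    position = limit_setpoint(position, target=1.0, max_step=0.1,
                              lower=-0.5, upper=0.5)
```

Applied once per axis and per cycle, a limiter like this turns a sudden marker jump into a gradual approach and never commands a value outside the chosen range.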

Results

Combining pre-generated data with real-time dancer movements provided flexibility to design multiple programs, including wave and earthquake simulations. These were created by generating time-varying sinusoidal signals in real time, with amplitude and frequency adjusted to produce the desired movement patterns.
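As a rough illustration of sinusoidal setpoint generation, the sketch below computes a single-axis value from an amplitude and a frequency. Summing several sinusoids to get a more irregular, earthquake-like motion is an assumption made here for illustration, as are the function names `wave_setpoint` and `earthquake_setpoint`. The project's actual signal parameters are not published in this text.

```python
import math

def wave_setpoint(t, amplitude, frequency_hz, phase=0.0):
    """Value of one sinusoid at time t (seconds)."""
    return amplitude * math.sin(2.0 * math.pi * frequency_hz * t + phase)

def earthquake_setpoint(t, components):
    """Sum of several sinusoids given as (amplitude, frequency_hz, phase).

    Mixing components of different frequencies is an illustrative way to
    get a less regular motion; it is not confirmed as the project's method.
    """
    return sum(wave_setpoint(t, a, f, p) for a, f, p in components)


# Example: a slow swell for one axis, sampled once per control cycle.
heave = wave_setpoint(t=0.5, amplitude=2.0, frequency_hz=0.5)
```

Raising the amplitude makes the motion larger, and raising the frequency makes it faster, which matches the two adjustments described above.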

To enable dancer control, markers were attached to a helmet so that the dancer’s head movements could control the platform’s orientation through Euler angles. A custom 3D-printed hand band with markers allowed the dancer’s hand movements to control the platform’s translational motion.
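The head-control mapping can be pictured as two steps: read the tracked helmet orientation as Euler angles, then scale and bound those angles before using them as platform setpoints. The sketch below assumes a Z-Y-X (yaw, pitch, roll) convention, a 3x3 rotation matrix as input, and hypothetical gain and angle limits. The convention and values used in the project are not stated in this text.

```python
import math

def euler_zyx_from_matrix(rotation):
    """Extract (roll, pitch, yaw) in radians from a 3x3 rotation matrix.

    Assumes R = Rz(yaw) * Ry(pitch) * Rx(roll). This convention is an
    assumption for illustration, not the project's documented choice.
    """
    # Clamp guards against values slightly outside [-1, 1] from noise.
    pitch = math.asin(max(-1.0, min(1.0, -rotation[2][0])))
    roll = math.atan2(rotation[2][1], rotation[2][2])
    yaw = math.atan2(rotation[1][0], rotation[0][0])
    return roll, pitch, yaw

def platform_orientation(rotation, gain=0.5, max_angle=math.radians(10.0)):
    """Scale head angles by `gain` and bound each to +/- `max_angle`.

    `gain` and `max_angle` are hypothetical tuning values.
    """
    return tuple(
        max(-max_angle, min(max_angle, gain * angle))
        for angle in euler_zyx_from_matrix(rotation)
    )
```

Hand control of translation could follow the same pattern, with the tracked hand position offset from a reference point, scaled, and bounded per axis.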

The final result was a 15-minute program where the dancer gradually gained more control:

1. Earthquake simulation (no dancer control)

2. Wave simulation (no dancer control)

3. Wave simulation (dancer head control)

4. Free movement (dancer head control)

5. Free movement (dancer hand control)

Overall, the project demonstrated how choreographic thinking and engineering logic can intersect, creating new forms of movement, control, and co-creation between human and machine.

Watch the 15-minute program on YouTube here: https://youtu.be/vV2iu7MQw6E

This video showcases the final structured performance sequence where the dancer gradually gains more control over the Stewart Platform, demonstrating the project’s outcomes.