This year marks our continued collaboration with the BioAcoustics team at the University of Agder, focusing on developing AI models to classify vocalizations from Atlantic cod. Compared to last year, the project expanded with new annotated recordings provided by Claudia Lacroix together with Victoria, and with valuable supervision from Professor Lei Jiao throughout the work on dataset handling, methodology, and model development.

A major development this year was the transition from simplified recordings to a complex three-year acoustic dataset recorded on eight hydrophone channels. Early in the project, we detected severe noise issues (including what initially appeared to be ground-loop interference), and subsequent investigation revealed physical degradation in several hydrophones. As a result, reliable annotations were concentrated on only two of the eight channels, which significantly reshaped our preprocessing pipeline and model design. Our work therefore expanded beyond typical AI experimentation: it required understanding how hardware faults propagate into acoustic features, how underwater noise masks vocalizations, and how such constraints shape the feasibility of long-term passive acoustic monitoring. We also contributed to improving the dataset itself by examining labeling inconsistencies, identifying silence regions, and supporting refinements to annotation procedures.

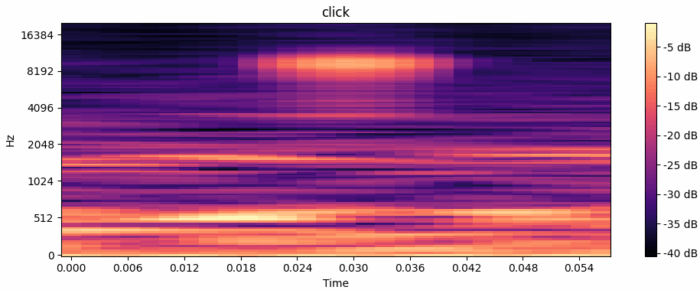

In parallel, we developed a full machine learning pipeline tailored for underwater bioacoustics. This included multi-stage preprocessing, spectrogram generation, silence extraction, shifting-window augmentation, group-wise dataset splitting, and the design of a compact 16-layer ResNet architecture optimized for limited data. We also explored transformer-based approaches and hybrid models combining waveform and spectrogram encoders, directions that remain promising for future work.
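
To illustrate why shifting-window augmentation and group-wise splitting go together, here is a minimal Python sketch. It is not the project's actual code: the function names (`shifted_windows`, `group_split`), the window and hop sizes, and the use of a recording ID as the grouping key are all assumptions made for the example. The idea is that overlapping windows cut from the same recording are highly correlated, so the split is done on whole groups rather than on individual windows to avoid leakage between training and test data.

```python
import random


def shifted_windows(n_samples, window, hop):
    """Return (start, end) sample indices of fixed-length windows
    shifted by `hop` samples across a clip of `n_samples` samples.
    Hypothetical helper: sizes are illustrative, not the project's."""
    return [(s, s + window) for s in range(0, n_samples - window + 1, hop)]


def group_split(groups, test_fraction=0.2, seed=0):
    """Split item indices so that every group (assumed here to be a
    recording ID) lands entirely in train or entirely in test."""
    unique = sorted(set(groups))
    rng = random.Random(seed)
    rng.shuffle(unique)
    n_test = max(1, round(len(unique) * test_fraction))
    test_groups = set(unique[:n_test])
    train_idx = [i for i, g in enumerate(groups) if g not in test_groups]
    test_idx = [i for i, g in enumerate(groups) if g in test_groups]
    return train_idx, test_idx


# Usage: cut overlapping windows from each (made-up) recording, tag each
# window with its recording ID, then split by recording.
recordings = {"rec_a": 10, "rec_b": 12, "rec_c": 10, "rec_d": 8, "rec_e": 10}
windows, groups = [], []
for rec_id, n_samples in recordings.items():
    for span in shifted_windows(n_samples, window=4, hop=2):
        windows.append((rec_id, span))
        groups.append(rec_id)

train_idx, test_idx = group_split(groups, test_fraction=0.2, seed=0)
train_recs = {groups[i] for i in train_idx}
test_recs = {groups[i] for i in test_idx}
print("train recordings:", sorted(train_recs))
print("test recordings:", sorted(test_recs))
```

A random split over individual windows would place near-identical, overlapping windows on both sides of the split and inflate test scores; splitting by group keeps the evaluation honest at the cost of somewhat uneven split sizes.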